Part 2: API Gateway and Mutual TLS

In part 1 of the series, we discussed how federated security helps secure access to protected resources and eliminates the need to present secret credentials on every user request. In this part, we will go through how the API gateway uses issued tokens both for calling backend services and for subscribing to notifications. But before we dive into the details, let’s review what an API gateway is and why it is a vital part of any distributed architecture, whether it runs in the cloud or on premises.

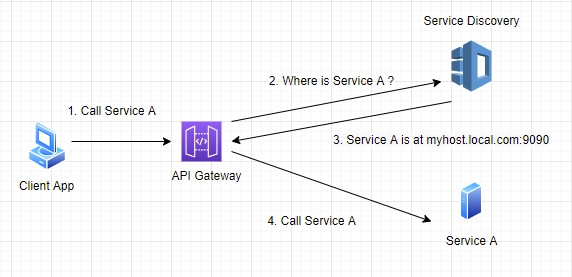

In a traditional three-tier application, clients call server APIs, which in turn read data from or persist data to a database and return the result to the caller. As the number of servers grows, clients need to know the address at which each service is located. This is solved using various discovery mechanisms, where each service registers its IP address and port on startup. Clients can then discover services dynamically and call their APIs; however, client applications remain coupled to the services because they know every service's physical address. Another problem is that every service must include authentication and authorization components in order to execute or refuse client requests.
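
To make the discovery step concrete, here is a minimal in-memory sketch of a registry in which each service instance registers its address on startup and a client resolves it by logical name. The `ServiceRegistry` and `Endpoint` names are hypothetical; real discovery mechanisms add health checks, registration TTLs and replication.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    """Physical address a service instance registers on startup."""
    host: str
    port: int


class ServiceRegistry:
    """Hypothetical in-memory registry: logical service name -> endpoints."""

    def __init__(self) -> None:
        self._services: dict[str, list[Endpoint]] = {}

    def register(self, name: str, endpoint: Endpoint) -> None:
        # Called by a service instance when it starts.
        instances = self._services.setdefault(name, [])
        if endpoint not in instances:
            instances.append(endpoint)

    def deregister(self, name: str, endpoint: Endpoint) -> None:
        # Called on graceful shutdown (or by a health checker).
        instances = self._services.get(name, [])
        if endpoint in instances:
            instances.remove(endpoint)

    def lookup(self, name: str) -> list[Endpoint]:
        # Clients resolve a logical name to physical addresses at call time.
        return list(self._services.get(name, []))


registry = ServiceRegistry()
registry.register("orders", Endpoint("10.0.0.5", 8080))
registry.register("orders", Endpoint("10.0.0.6", 8080))
print(registry.lookup("orders"))
```

Note that the caller ends up holding the physical `host:port` pairs, which is exactly the client-to-service coupling described above.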

Figure 1: Direct client-to-server invocation with service discovery

An API gateway offers a solution to these issues and introduces several advantages over direct client-to-service communication. Once all client communication flows through a single point, you can implement authentication and authorization in one location, leaving the backend services' code purely business oriented. The API gateway connects to the same discovery mechanisms to locate services, but now the services may reside in another LAN or even in another data center on the other side of the globe, and the client is not aware of any of these physical locations: all it knows is the address of the API gateway.
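
A minimal sketch of that idea, assuming a hypothetical route table that maps path prefixes to logical service names, a `resolve` callable standing in for service discovery and an `authorize` hook: authentication and authorization run once in the gateway, then the gateway resolves the backend, so the client only ever sees the gateway's address. All names here are illustrative and do not come from any specific product.

```python
from typing import Callable, Optional

# Hypothetical hooks: discovery maps a service name to "host:port";
# the authorizer decides whether a token may reach a given service.
Resolver = Callable[[str], Optional[str]]
Authorizer = Callable[[str, str], bool]


class Gateway:
    def __init__(self, routes: dict[str, str], resolve: Resolver, authorize: Authorizer):
        self.routes = routes        # path prefix -> logical service name
        self.resolve = resolve      # service discovery lookup
        self.authorize = authorize  # the single place where auth happens

    def route(self, path: str) -> Optional[str]:
        # Match whole path segments; the longest matching prefix wins.
        matches = [p for p in self.routes if path == p or path.startswith(p + "/")]
        return self.routes[max(matches, key=len)] if matches else None

    def handle(self, path: str, token: str) -> tuple[int, str]:
        service = self.route(path)
        if service is None:
            return 404, "no route"
        if not self.authorize(token, service):
            return 403, "forbidden"
        address = self.resolve(service)
        if address is None:
            return 503, "service unavailable"
        # A real gateway would call the backend here; we only report the target.
        return 200, f"forwarded to {service} at {address}"


gw = Gateway(
    routes={"/orders": "orders", "/orders/items": "items"},
    resolve={"orders": "10.0.0.5:8080", "items": "10.1.0.7:9090"}.get,
    authorize=lambda token, service: token == "valid",
)
print(gw.handle("/orders/42", "valid"))
```

Backend services behind `gw` never see an unauthorized request, which is what lets their code stay business oriented.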

Figure 2: Service invocation through API gateway

Another important aspect of an API gateway is protocol bridging, which is a key requirement when working with legacy systems. Client applications usually call the API gateway through a REST API, and the gateway in turn can use gRPC, Web Services, raw sockets or proprietary protocols to call backend services, returning the results to the client as JSON over HTTP.

As throughput on the API gateway increases, it is easy to add a caching layer and use the read-through and write-behind caching patterns to reduce the latency of reading resources from, and writing them to, backend services or databases.
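
The two patterns can be sketched in a few lines. This is a minimal single-process illustration, assuming a hypothetical backend exposed as `load`/`store` callables: on a read miss the cache fetches from the backend and keeps the result (read-through), while writes update the cache immediately and are queued to be persisted later (write-behind). A production cache would add expiration, size limits and a background flusher.

```python
from collections import OrderedDict
from typing import Any, Callable


class ReadThroughWriteBehindCache:
    def __init__(self, load: Callable[[str], Any], store: Callable[[str, Any], None]):
        self._load = load    # hypothetical backend read
        self._store = store  # hypothetical backend write
        self._data: dict[str, Any] = {}
        self._dirty: "OrderedDict[str, Any]" = OrderedDict()  # pending writes

    def get(self, key: str) -> Any:
        # Read-through: only call the backend on a cache miss.
        if key not in self._data:
            self._data[key] = self._load(key)
        return self._data[key]

    def put(self, key: str, value: Any) -> None:
        # Write-behind: update the cache now, persist later.
        # Repeated writes to the same key are coalesced into one backend write.
        self._data[key] = value
        self._dirty[key] = value
        self._dirty.move_to_end(key)

    def flush(self) -> int:
        # Would normally run on a timer or a background worker.
        flushed = 0
        while self._dirty:
            key, value = self._dirty.popitem(last=False)
            self._store(key, value)
            flushed += 1
        return flushed


backend = {"a": 1}
loads: list[str] = []


def load(key: str) -> Any:
    loads.append(key)  # record each backend read
    return backend.get(key)


cache = ReadThroughWriteBehindCache(load, backend.__setitem__)
cache.get("a")
cache.get("a")     # served from the cache, no second backend read
cache.put("b", 2)  # visible in the cache, not yet persisted
```

The trade-off of write-behind is durability: writes still sitting in the dirty queue are lost if the gateway instance crashes before `flush` runs.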

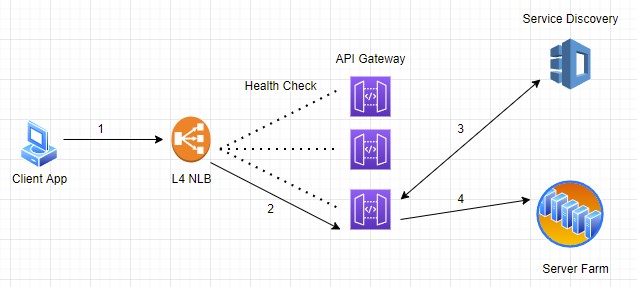

Keen readers might ask: “If all traffic goes through one component in the system, isn’t it a performance bottleneck and a single point of failure?” The answer depends on how you implement and deploy your API gateway instances:

- The API gateway is deployed as multiple instances; to make this easy, the instances must be stateless.

- The instances are deployed behind an L4 NLB (Network Load Balancer), which distributes traffic among them using a round-robin algorithm and probes each API gateway instance's health before forwarding any traffic to it.
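
To illustrate the load balancer's role, here is a hedged sketch of round-robin selection that skips instances failing their health probe. The `is_healthy` callable stands in for whatever probe the load balancer actually uses (for example, a TCP connect or an HTTP health endpoint); a real NLB does this in the network layer rather than in application code. Because the gateway instances are stateless, any healthy instance can serve any request.

```python
import itertools
from typing import Callable, Optional, Sequence


class RoundRobinBalancer:
    def __init__(self, instances: Sequence[str], is_healthy: Callable[[str], bool]):
        self._instances = list(instances)
        self._is_healthy = is_healthy  # hypothetical health probe
        self._cycle = itertools.cycle(self._instances)

    def next_instance(self) -> Optional[str]:
        # Try each instance at most once per call and skip unhealthy ones.
        for _ in range(len(self._instances)):
            candidate = next(self._cycle)
            if self._is_healthy(candidate):
                return candidate
        return None  # no healthy gateway instance available


down = {"gw-2"}  # instances currently failing their probe
lb = RoundRobinBalancer(["gw-1", "gw-2", "gw-3"], lambda name: name not in down)
picks = [lb.next_instance() for _ in range(4)]
print(picks)
```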

Figure 3: Service invocation through NLB and API gateway

Other advantages of an API gateway, which I am not going to cover in depth, include:

- Query aggregation – the ability to query multiple backend services in parallel and return the combined data to the client.

- Traffic shaping – the ability to route client requests to different service instances based on predefined policies, for example, exposing a new feature to only 20% of client traffic while trying it out.

- Retries and circuit breakers

- Logging, distributed tracing and monitoring – the observability trio

- Rate limiting

- BFF (Backend for Frontend) – an API gateway per client application type (mobile, web, etc.)
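
As one example from this list, the 20% traffic-shaping scenario can be sketched as a deterministic split: hash a stable request attribute (here a hypothetical user ID) into 100 buckets and send the first 20 buckets to the new version. Hashing rather than random sampling keeps each user pinned to the same version across requests. The version names `orders-v1` and `orders-v2` are illustrative only.

```python
import hashlib


def bucket(user_id: str, buckets: int = 100) -> int:
    # Stable hash: the same user always lands in the same bucket.
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % buckets


def choose_version(user_id: str, canary_percent: int = 20) -> str:
    # Buckets [0, canary_percent) get the new feature, the rest stay on stable.
    return "orders-v2" if bucket(user_id) < canary_percent else "orders-v1"


users = [f"user-{i}" for i in range(10_000)]
share = sum(choose_version(u) == "orders-v2" for u in users) / len(users)
print(f"{share:.1%} of users routed to orders-v2")
```

Raising `canary_percent` gradually widens the rollout without moving users who are already on the new version.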

Most API gateway implementations work as L7 load balancers, inspecting query parameters or headers and forwarding HTTP requests to backend servers. In our case, we needed a custom solution that exposes REST, GraphQL and WebSocket interfaces but communicates with our in-house discovery mechanism and service bus using proprietary protocols.

Enough of the overview; let’s get back to our issued tokens and how the API gateway uses them to authenticate and authorize user requests.

After successfully authenticating a user login request, the authorization server encodes claims into the issued token, and the client application then uses that token to call the API gateway. The standard user claims contain the system user ID, the user roles and the user agency. When a request arrives, the API gateway validates the issued token and extracts its claims. Validation includes checking the token signature, audience, issuer and lifetime. All of this is done statelessly, without calling the authorization server: all that is needed is the public portion of the key used to sign the token. The user claims contain all the information needed to decide whether the client can access a specific resource. Once the claims are authorized, the API gateway discovers the backend server address, initializes a communication channel using the technology suitable for that service, makes an API call (or sometimes several API calls to several servers) and sends the response back to the caller.
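
The validation steps above can be sketched with the Python standard library. This is a minimal illustration, not the gateway's actual code: it parses a JWT-style token, checks the signature, issuer, audience and lifetime, and then makes a role-based decision from the claims. One important assumption: the article describes asymmetric signing, where the gateway needs only the public key, but the standard library has no RSA or ECDSA verification, so this sketch uses HS256 (a shared HMAC secret) purely so it runs without dependencies. In practice you would use a maintained JWT library with RS256 or ES256 and the authorization server's public key. The claim names `roles` and `agency` are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


class TokenError(Exception):
    """Raised when a token fails any validation step."""


def b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(part: str) -> bytes:
    # JWT segments are base64url without padding; restore it before decoding.
    return base64.urlsafe_b64decode(part + "=" * (-len(part) % 4))


def sign(signing_input: str, key: bytes) -> bytes:
    # HS256 stand-in; the article's setup verifies with a public key instead.
    return hmac.new(key, signing_input.encode("utf-8"), hashlib.sha256).digest()


def make_token(claims: dict, key: bytes) -> str:
    """Test helper playing the authorization server's role."""
    header = b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = b64url_encode(json.dumps(claims).encode())
    signing_input = f"{header}.{payload}"
    return f"{signing_input}.{b64url_encode(sign(signing_input, key))}"


def validate_token(token: str, key: bytes, issuer: str, audience: str,
                   now: Optional[float] = None, leeway: float = 30.0) -> dict:
    """Stateless validation: no call to the authorization server."""
    parts = token.split(".")
    if len(parts) != 3:
        raise TokenError("malformed token")
    header_b64, payload_b64, sig_b64 = parts
    try:
        header = json.loads(b64url_decode(header_b64))
        signature = b64url_decode(sig_b64)
        claims = json.loads(b64url_decode(payload_b64))
    except ValueError as exc:  # bad base64, bad UTF-8 or bad JSON
        raise TokenError("malformed token") from exc
    if header.get("alg") != "HS256":  # pin the algorithm; never accept "none"
        raise TokenError("unexpected algorithm")
    # 1. Signature over "header.payload", compared in constant time.
    if not hmac.compare_digest(sign(f"{header_b64}.{payload_b64}", key), signature):
        raise TokenError("bad signature")
    # 2. Issuer and audience.
    if claims.get("iss") != issuer:
        raise TokenError("wrong issuer")
    aud = claims.get("aud")
    if audience not in (aud if isinstance(aud, list) else [aud]):
        raise TokenError("wrong audience")
    # 3. Lifetime: exp is required, nbf is optional; allow small clock skew.
    now = time.time() if now is None else now
    if "exp" not in claims or now > claims["exp"] + leeway:
        raise TokenError("token expired or missing exp")
    if now < claims.get("nbf", now) - leeway:
        raise TokenError("token not yet valid")
    return claims


def authorize(claims: dict, required_role: str) -> bool:
    # Hypothetical policy: the resource requires a specific role claim.
    return required_role in claims.get("roles", [])


KEY = b"demo-secret"  # hypothetical shared secret for the HS256 stand-in
ISSUER, AUDIENCE = "https://auth.example.com", "api-gateway"
token = make_token({"iss": ISSUER, "aud": AUDIENCE, "sub": "user-17",
                    "roles": ["operator"], "agency": "agency-a",
                    "exp": time.time() + 300}, KEY)
claims = validate_token(token, KEY, ISSUER, AUDIENCE)
print(claims["sub"], authorize(claims, "operator"))
```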

Calling APIs to get resources is only part of the equation. How can we use the same tokens to subscribe to notifications published by the server? Unlike API calls, which are stateless and open and close a connection on each call, subscribing to notifications means the client creates a secure channel to the API gateway, usually using the WebSocket protocol, and the API gateway pushes notifications published by backend services through that channel. The initial WebSocket connection is authenticated and authorized the same way as an API call. The API gateway then examines the token's expiration time and registers a timer to evict subscribed clients whose tokens have expired. It is the client application's responsibility to renew the token on the open WebSocket connection well before it expires. This way, the connection stays open as long as the client application is running, and tokens are renewed seamlessly on the same connection.
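
The expiry bookkeeping described above can be sketched without any networking: the gateway keeps each subscription's token expiry in a min-heap, a periodic timer evicts subscriptions whose expiry has passed, and a token renewal on the same connection simply records a new deadline. The connection IDs, the `SubscriptionManager` class and the lazy-deletion approach are assumptions for illustration; a real implementation would also close the WebSocket on eviction.

```python
import heapq


class SubscriptionManager:
    """Tracks token expiry per open WebSocket connection (hypothetical)."""

    def __init__(self) -> None:
        self._expiry: dict[str, float] = {}       # connection id -> current token exp
        self._heap: list[tuple[float, str]] = []  # (exp, connection id); may hold stale entries

    def subscribe(self, conn_id: str, token_exp: float) -> None:
        # Called once the connection's token has been validated.
        self._expiry[conn_id] = token_exp
        heapq.heappush(self._heap, (token_exp, conn_id))

    def renew(self, conn_id: str, new_exp: float) -> None:
        # Client sent a fresh, already validated token on the same connection.
        if conn_id in self._expiry:
            self.subscribe(conn_id, new_exp)

    def evict_expired(self, now: float) -> list[str]:
        # Run periodically from a timer; stale heap entries of renewed
        # connections no longer match the current deadline and are skipped.
        evicted = []
        while self._heap and self._heap[0][0] <= now:
            exp, conn_id = heapq.heappop(self._heap)
            if self._expiry.get(conn_id) == exp:
                del self._expiry[conn_id]
                evicted.append(conn_id)  # a real gateway closes the socket here
        return evicted

    def is_subscribed(self, conn_id: str) -> bool:
        return conn_id in self._expiry


mgr = SubscriptionManager()
mgr.subscribe("conn-a", token_exp=100.0)
mgr.subscribe("conn-b", token_exp=100.0)
mgr.renew("conn-b", new_exp=400.0)  # conn-b renewed before its token expired
evicted = mgr.evict_expired(now=150.0)
print(evicted)
```

Lazy deletion keeps renewal cheap: instead of searching the heap for the old deadline, the stale entry is discarded when it eventually surfaces.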

As mentioned before, C-Insight is a multi-agency platform, meaning that a user belonging to an agency is allowed to operate only on entities that belong to that agency. The API gateway dispatches each notification by inspecting the subscriber's agency ID claim and forwarding the message only to subscribers that are allowed to receive it.
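
A minimal sketch of that filtering step, assuming each subscription keeps the agency ID claim from its validated token and each notification carries the agency ID of the entity it concerns. The `Subscriber` and `Notification` types and the `send` callable are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Subscriber:
    conn_id: str
    agency_id: str  # taken from the validated token's agency claim


@dataclass(frozen=True)
class Notification:
    agency_id: str  # agency that owns the entity the message is about
    payload: str


def dispatch(note: Notification, subscribers: list[Subscriber],
             send: Callable[[str, str], None]) -> int:
    """Forward a notification only to subscribers from the owning agency."""
    delivered = 0
    for sub in subscribers:
        if sub.agency_id == note.agency_id:
            send(sub.conn_id, note.payload)  # push over that WebSocket
            delivered += 1
    return delivered


outbox: list[tuple[str, str]] = []
subs = [Subscriber("conn-1", "agency-a"), Subscriber("conn-2", "agency-b"),
        Subscriber("conn-3", "agency-a")]
count = dispatch(Notification("agency-a", "sensor alert"), subs,
                 lambda conn, msg: outbox.append((conn, msg)))
print(count, outbox)
```

With many subscribers, indexing connections by agency ID (a dictionary from agency to connection set) avoids scanning every subscription for each message.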

In the last part of the series, we will discuss how we connected on-premises sensor systems running at the edge to services running in cloud data centers, securely and with high performance.